NIST drafts privacy protection guidance for AI-driven research

Tasked under the Biden Administration’s Executive Order on AI, the U.S. National Institute of Standards and Technology on Monday released draft guidance on evaluating data privacy protection for use with artificial intelligence.

WHY IT MATTERS

NIST announced the new guidelines on differential privacy guarantees and stated on its website that its goal is to help data-centric organizations strike a balance between privacy and accuracy.

“Differential privacy is one of the more mature privacy-enhancing technologies used in data analytics, but a lack of standards can make it difficult to employ effectively — potentially creating a barrier for users,” the agency said in the announcement.

In the explainer, NIST posed a “tricky situation”: health researchers would like to access consumer fitness tracker data to help improve medical diagnostics.

“How do the researchers obtain useful and accurate information that could benefit society while also keeping individual privacy intact?”

NIST said Draft NIST Special Publication (SP) 800-226, Guidelines for Evaluating Differential Privacy Guarantees, is designed for federal agencies, as mandated under the order, but it’s also a resource for software developers, business owners and policymakers to “understand and think more consistently about claims made about differential privacy.”

The algorithm was spawned as part of last year’s Privacy-Enhancing Technologies Prize Challenge, which had a combined U.S.-U.K. prize pool of $1.6 million for the use of federated learning to generate novel cryptography to keep data encrypted during AI model training.

Privacy-enhancing technologies, or PETs, can be used in novel cryptography, to address money laundering and to predict the locations of public health emergencies. More than 70 solutions were put through red team attacks to see if the raw data could be protected.

“Privacy-enhancing technologies are the only way to solve the quandary of how to harness the value of data while protecting people’s privacy,” Arati Prabhakar, assistant to the President for science and technology and director of the White House Office of Science and Technology Policy, said in March in a White House announcement about the winners.

However, differential privacy is still maturing. The technique uses either a central aggregator or multiple aggregators to add noise, according to a personal blog post by Damien Desfontaines, staff scientist at differential privacy firm Tumult Labs.
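To make the noise-adding idea concrete, here is a minimal Python sketch of the Laplace mechanism, a standard differential privacy technique, applied by a single central aggregator to a counting query. It is an illustration only and is not taken from the NIST draft or from Desfontaines' blog. The function names, the step-count data and the epsilon values are all hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace distribution centered at 0."""
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records matching `predicate`."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical daily step counts, one value per fitness tracker user.
    daily_steps = [3200, 12500, 8700, 15100, 9900, 11050, 4300, 10400]
    for epsilon in (0.1, 1.0, 10.0):
        noisy = private_count(daily_steps, lambda s: s >= 10000, epsilon)
        print(f"epsilon={epsilon}: noisy count = {noisy:.2f} (true count is 4)")
```

A smaller epsilon adds more noise, which strengthens privacy but reduces accuracy. A larger epsilon does the opposite. A real deployment would rely on a vetted differential privacy library rather than a sketch like this one.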

There are risks, according to Naomi Lefkovitz, manager of NIST’s Privacy Engineering Program and an editor on the draft.

“We want this publication to help organizations evaluate differential privacy products and get a better sense of whether their creators’ claims are accurate,” she said in NIST’s guidelines announcement.

The competition revealed “that differential privacy is the best method we know of for providing robust privacy protection against attacks after the model is trained,” Lefkovitz said.

“It won’t prevent all types of attacks, but it can add a layer of defense.”

This puts the onus on developers to evaluate what a real-world guarantee of privacy requires.

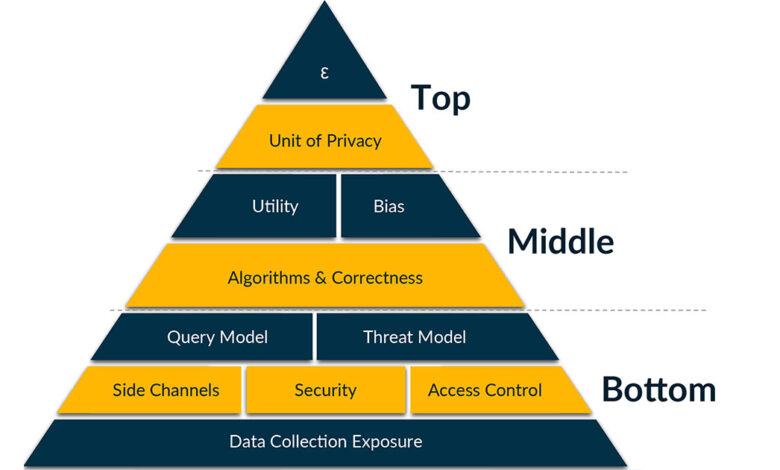

To properly evaluate a claim of differential privacy, developers need to understand multiple factors, which NIST identified and organized in a “differential privacy pyramid.” The top level contains the most direct measures of privacy guarantees, the middle level contains factors that can undermine a differential privacy guarantee, and the bottom level is composed of underlying factors, such as the data collection process.

NIST is requesting public comments through January 25, 2024, and a final version is expected to be published later next year.

THE LARGER TREND

Powerful AI models built with quantum computing widen the attack surface for organizations, such as those in healthcare, that house large amounts of data, including protected data. Any use of encrypted protected health information could be vulnerable to such an attack.

In September, NIST released draft standards for three quantum-resistant cryptography algorithms designed to withstand quantum-powered cyberattacks. Feedback on the drafts was due just before Thanksgiving.

In the not-too-far-off future, quantum computers could crack binary encryption rapidly.

“The devil is in the details,” said Dan Draper, founder and CEO of CipherStash.

Protecting data that relies on public key cryptography is of primary concern, he explained in a look ahead on data encryption trends in 2024.

“There are organizations that are capturing lots and lots of encrypted traffic – secure messages, secure Zoom calls,” he said, to store now for future nefarious uses.

Draper told Healthcare IT News last month that while the NIST quantum-safe public key cryptography standards are still being finalized, “it’s looking very promising” for their ability to defend against quantum attacks.

He also noted that despite the progress, a Y2K-like race to update software for quantum safety is looming.

“We’re going to have to rush to get that updated quickly,” he said.

ON THE RECORD

“We show the math that’s involved, but we are trying to focus on making the document accessible,” Lefkovitz said in the announcement. “We don’t want you to have to be a math expert to use differential privacy effectively.”

Andrea Fox is senior editor of Healthcare IT News.

Email: afox@himss.org

Healthcare IT News is a HIMSS Media publication.
